About Our Project

Real-Time Exercise Guidance Using Motion Modeling

Overview

Imagine a system that assists users with their workouts by providing instant feedback on their exercise movements. The goal is to create a system capable of detecting and analyzing body movements during exercises using a webcam. This system should visually represent the user's body using lines and key points, while offering constructive feedback to correct or improve exercise form.

The Challenge

Develop a Python application that leverages a webcam to capture user movements and applies motion modeling techniques to display key points and body lines in real-time. This system must automatically detect and analyze the user's exercise movements, identify weaknesses or mistakes, and offer corrective feedback.

Inputs

- Webcam Feed: Real-time video input from the user's webcam.

- Exercise Data: Specific movements and postures requiring analysis.

Outputs

- Visual Display: Real-time visual representation of the user's body with lines and key points indicating posture and movement.

- Feedback: Automated suggestions or corrections to enhance exercise form based on detected movements.

Sample Inputs

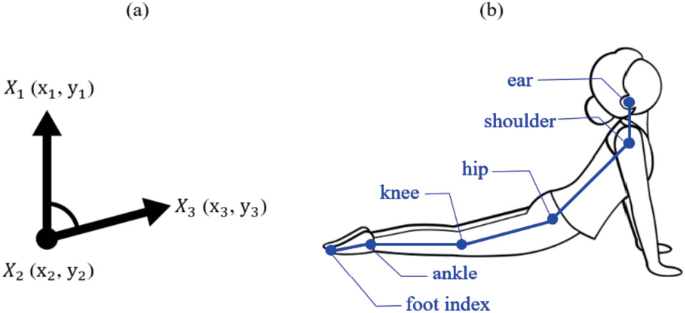

- Pose Detection: Utilizes MediaPipe Pose for detecting and tracking human poses.

- Webcam Feed: Captures live video of the user.

- Visual Display: Key points and connections are drawn on the user's body to illustrate their posture.

- Feedback: Includes analysis and suggestions for improving form based on key points.

Why It Matters

- Immediate Feedback: Provides on-the-spot corrections to ensure safe and effective exercises.

- Enhanced Performance: Helps users improve their technique, leading to better workout outcomes.

- User Engagement: Engages users by turning exercise sessions into interactive experiences.

Overview of the App

This app is a real-time exercise feedback system that uses a webcam to detect body movements and provide corrective feedback. It uses the MediaPipe Pose module to detect body landmarks and compute joint angles from recorded videos or live webcam feeds.

Key Features

Upload Video and Extract Reference Angles:

- Users can upload a video of their exercises.

- The app processes this video to calculate reference joint angles from the pose landmarks detected by MediaPipe.

- These reference angles serve as the basis for evaluating live movements.
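
The reference-angle step above can be sketched roughly as follows. This is a minimal illustration, not the app's actual code: it assumes each video frame has already been reduced to a dictionary of 2D landmark coordinates, and the `JOINTS` mapping, the landmark names, and both function names are hypothetical. It uses only the standard library for portability; the same arithmetic could be written with NumPy.

```python
import math
from statistics import mean

# Hypothetical joint definitions: joint name -> (first point, vertex, last point).
JOINTS = {
    "left_elbow": ("left_shoulder", "left_elbow", "left_wrist"),
    "left_knee": ("left_hip", "left_knee", "left_ankle"),
}


def joint_angle(a, b, c):
    """Return the angle at vertex b, in degrees (0-180), for points a-b-c."""
    ba = (a[0] - b[0], a[1] - b[1])
    bc = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("two of the three points coincide")
    cos_theta = (ba[0] * bc[0] + ba[1] * bc[1]) / norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def reference_angles(frames, joints=JOINTS):
    """Average each joint angle over all frames in which it is visible.

    `frames` is an iterable of dicts mapping landmark name -> (x, y).
    """
    per_joint = {name: [] for name in joints}
    for landmarks in frames:
        for name, (a, b, c) in joints.items():
            if all(key in landmarks for key in (a, b, c)):
                per_joint[name].append(
                    joint_angle(landmarks[a], landmarks[b], landmarks[c])
                )
    # Joints that were never visible are left out of the result.
    return {name: mean(vals) for name, vals in per_joint.items() if vals}
```

Averaging over the whole video is only one possible choice; a per-phase reference (for example, top and bottom of a repetition) may suit some exercises better.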

Real-time Exercise Evaluation:

- The app takes live video from the user's webcam.

- It calculates joint angles in real time and compares them to the reference angles.

- The error for each joint is the difference between its live angle and its reference angle.
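
As a rough sketch of the error calculation described above, assuming live and reference angles are both stored as dictionaries keyed by joint name (the function names and the signed-error convention are illustrative assumptions, not taken from the app):

```python
def angle_errors(live, reference):
    """Signed error in degrees (live minus reference) per shared joint."""
    return {
        joint: live[joint] - reference[joint]
        for joint in reference
        if joint in live
    }


def mean_absolute_error(errors):
    """Single summary score; None when no joints could be compared."""
    if not errors:
        return None
    return sum(abs(e) for e in errors.values()) / len(errors)
```

Keeping the sign makes it possible to tell whether a joint is too open or too closed, while the mean absolute error gives one number per frame.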

Motion Detection:

- The system detects whether the user’s movements match predefined exercises (e.g., curls, squats) based on joint angles.

- Motion detection is performed by checking whether the calculated angles fall within the specified ranges for each exercise.
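
The range check described above might look like the following sketch. The `EXERCISE_RANGES` table and its thresholds are invented for illustration; the actual ranges used by the app are not stated here.

```python
# Hypothetical thresholds in degrees: exercise -> joint -> (min, max).
EXERCISE_RANGES = {
    "curl": {"left_elbow": (30.0, 160.0)},
    "squat": {"left_knee": (60.0, 170.0), "left_hip": (50.0, 175.0)},
}


def detect_exercises(angles, ranges=EXERCISE_RANGES):
    """Return the exercises whose joint ranges all contain the given angles."""
    matches = []
    for exercise, joint_ranges in ranges.items():
        if all(
            joint in angles and low <= angles[joint] <= high
            for joint, (low, high) in joint_ranges.items()
        ):
            matches.append(exercise)
    return matches
```

A single-frame check like this is deliberately simple; it cannot distinguish a held pose from a moving repetition, which would need angles tracked over time.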

Feedback and Evaluation Results:

- Provides visual feedback and evaluation results that show where the live movement deviates from the reference.

- Users can view these results via an API endpoint, which returns the analysis in JSON format.
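
One possible shape for the JSON results is sketched below. The field names, the `build_results` helper, and the 10-degree tolerance are assumptions made for illustration; the app's real response format is not documented here. In a Flask route, a dictionary like this could be passed to `jsonify`.

```python
import json


def build_results(reference, live, tolerance_deg=10.0):
    """Assemble a JSON-serializable summary of per-joint discrepancies."""
    joints = {}
    for name, ref_angle in reference.items():
        if name not in live:
            continue  # joint not visible in the live frame
        error = live[name] - ref_angle
        joints[name] = {
            "reference": round(ref_angle, 1),
            "live": round(live[name], 1),
            "error": round(error, 1),
            "within_tolerance": abs(error) <= tolerance_deg,
        }
    return {"tolerance_deg": tolerance_deg, "joints": joints}


# Example serialization with made-up angles.
payload = json.dumps(build_results({"left_elbow": 90.0}, {"left_elbow": 104.26}))
```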

Web Interface

- Index Page: Gives users access to the main functionality, including video uploads and live evaluation.

- Upload Form: Users upload their reference exercise videos.

- Results Page: Displays feedback and suggested improvements based on live analysis.

Key Components

- Flask Backend: Handles routing, file uploads, and initialization.

- MediaPipe Pose: Performs landmark detection required for motion analysis.

- OpenCV: Used for video capture and processing.

- NumPy: Handles numerical computation, especially angle calculation and averaging.

- Threading: Enables concurrent execution of the live assessment process without blocking the Flask server.
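
To illustrate the threading component, here is a minimal sketch of a background assessment loop that a web server could start and poll without blocking. The `LiveAssessment` class and the `read_angles` callable are hypothetical stand-ins; in the real app the callable would wrap webcam capture and pose estimation.

```python
import threading


class LiveAssessment:
    """Runs a polling loop in a background thread and keeps the latest result."""

    def __init__(self, read_angles, interval=0.03):
        self._read_angles = read_angles  # hypothetical: returns a dict of joint angles
        self._interval = interval        # seconds to wait between reads
        self._stop = threading.Event()
        self._lock = threading.Lock()
        self._latest = None
        self._thread = None

    def start(self):
        if self._thread is not None and self._thread.is_alive():
            return  # already running
        self._stop.clear()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while not self._stop.is_set():
            angles = self._read_angles()
            with self._lock:
                self._latest = angles
            # wait() returns early if stop() is called during the pause.
            self._stop.wait(self._interval)

    def stop(self):
        self._stop.set()
        if self._thread is not None:
            self._thread.join()

    def latest(self):
        with self._lock:
            return self._latest
```

A request handler would call `start()` once, read `latest()` whenever results are requested, and call `stop()` when the session ends.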

Usage Steps

Upload a Video:

- Users upload a reference video that demonstrates correct exercise form.

- Reference angles are extracted for later comparison.

Start Live Assessment:

- After setting up a reference, users can start a live assessment session.

- The system takes real-time webcam input to calculate and evaluate angles.

Receive Feedback:

- Users receive analytical feedback on their live performance compared to the reference angles.

- The application detects movements and suggests corrections if necessary.
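
As a sketch of how angle errors could be turned into suggested corrections, assume signed errors in degrees (live minus reference) keyed by joint name. The wording, the `feedback_messages` helper, and the 10-degree tolerance are illustrative assumptions rather than the app's actual output.

```python
def feedback_messages(errors, tolerance_deg=10.0):
    """Translate signed joint-angle errors into short corrective hints."""
    messages = []
    for joint, error in sorted(errors.items()):
        if abs(error) <= tolerance_deg:
            continue  # close enough to the reference
        # A positive error means the joint is more open than the reference.
        action = "bend" if error > 0 else "extend"
        label = joint.replace("_", " ").capitalize()
        messages.append(
            f"{label}: {action} about {abs(error):.0f} degrees more "
            "to match the reference."
        )
    return messages or ["Form matches the reference within tolerance."]
```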

Challenges

- Real-time Processing: Efficiently manage webcam feeds to provide immediate feedback.

- Accurate Detection and Analysis: Compute joint angles reliably and recognize movement patterns well enough to guide user corrections.

This solution analyzes exercise form in real time and gives users immediate feedback, with the aim of improving exercise effectiveness. The implementation combines computer vision with a feedback system to support training and exercise correction.